Blockchain Sharding 101

Ethereum’s Cancun upgrade will feature a scaling solution called proto-danksharding. It’s a good occasion to dive into sharding, how it’s implemented by blockchains like NEAR, TON, and Zilliqa, and why Ethereum rejected its original sharding design.

TL;DR

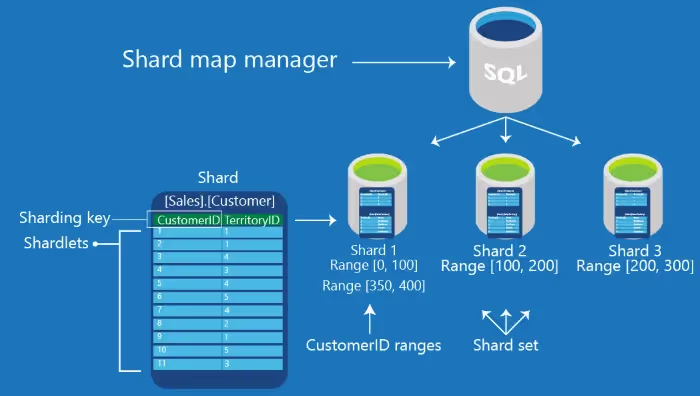

- The concept of sharding comes from traditional IT, where it means splitting a database into several smaller subsets (shards) that are stored and handled by separate servers.

- In blockchain, sharding can improve scaling, especially in networks where each validator has to process every transaction. Effectively, you split the blockchain (state, storage, transaction processing, or all of these) and assign each shard to a group of validators, with some sort of a central chain linking them all.

- Blockchain sharding is challenging. You need to decide how exactly to split the network, how to record shard blocks on the main chain, how to ensure communication between shards and their security, etc.

- Ethereum has had sharding on its roadmap since 2018. The original plan (2018-2022) was to have 64 shards with at least 128 validators in each, reshuffled every epoch (6.4 minutes). In 2022, the roadmap changed in reaction to the rise of rollups. The new design is called danksharding, and its first version will be proto-danksharding, to be introduced in the Cancun-Deneb (Dencun) upgrade.

- Proto-danksharding introduces blob-carrying transactions, where blobs (Binary Large Objects) are chunks of raw data that can’t be read by the EVM. Rollups will send transactions to Ethereum as cheap blobs instead of writing them on the mainnet as expensive calldata. Blobs will be stored on the Beacon Chain for only a few weeks.

- Cancun was supposed to go live in October 2023, but it will probably get delayed to 2024.

- The first blockchain to implement sharding in practice was actually Zilliqa in 2017. It has four shards, each processing a specific type of transaction. The whole thing is presided over by the Directory Service Committee. However, each node still has to store the whole blockchain state.

- The Open Network (TON) has a more advanced sharding model: the number of shards can grow or shrink, and their potential number is truly massive.

What is sharding in traditional IT?

Sharding means splitting a dataset into smaller parts that can be stored and processed separately. It’s widely used in traditional IT to manage very large applications that would cause a single server to lag or freeze.

We’re used to talking about scaling in the context of blockchains, but it can be a problem for centralized databases, too. Popular applications receive thousands of queries a minute, far more than any blockchain or dApp. Eventually, the CPU or RAM will get overloaded, the network will run out of bandwidth, or the server will run out of storage.

There are two approaches to database scaling: vertical and horizontal.

- Vertical scaling means increasing system capacity by adding processors, memory, or storage space to the existing servers. However, there are practical limitations to such upgrades, and if something goes wrong, the whole database will become inaccessible.

- Horizontal scaling means adding servers (nodes) as an application grows. It’s easy, and you can have as many machines as you need. The costs of maintaining the data center (server cooling, electricity, etc.) will also increase, but in a predictable way. However, horizontal scaling usually requires additional coding. In general, this method is recommended for databases with more than 1,000 users.

Furthermore, horizontal scaling can be done in two ways: replication and sharding.

- Replication involves creating copies of the entire database and storing them on multiple machines. Changes are made only to the primary copy and are automatically replicated in the copies. This method increases fault tolerance and system availability (if some servers break down, the database will still work), but it doesn’t help to deal with growing database size.

- Sharding means dividing a database into smaller subsets (shards) that all live on separate servers. Each machine processes queries sent to its assigned shard and doesn’t deal with other shards (known as a shared-nothing architecture). Sharding is supported by leading database providers like Amazon Web Services and MongoDB.
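The routing step behind a shared-nothing setup can be sketched in a few lines. This is a minimal illustration, not how any particular database does it: the shard count, the `shard_for` function, and the `ShardedStore` class are all hypothetical names, and each "server" is just an in-memory dictionary.

```python
import hashlib

NUM_SHARDS = 4  # hypothetical shard count


def shard_for(key: str, num_shards: int = NUM_SHARDS) -> int:
    """Map a record key to a shard index using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


class ShardedStore:
    """Each shard is an independent dict standing in for a separate server."""

    def __init__(self, num_shards: int = NUM_SHARDS) -> None:
        self.shards = [dict() for _ in range(num_shards)]

    def put(self, key: str, value: object) -> None:
        # Only the shard that owns the key is touched.
        self.shards[shard_for(key, len(self.shards))][key] = value

    def get(self, key: str) -> object:
        return self.shards[shard_for(key, len(self.shards))][key]


store = ShardedStore()
store.put("alice", {"balance": 10})
store.put("bob", {"balance": 25})
```

Every query for a given key lands on the same shard, so the other shards never see it.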

Sharding is the most affordable solution for growing databases, but comes with several issues:

- Fault tolerance: if one of the servers fails, the rest of the database will keep working – but you won’t be able to use the data in the affected shard.

- Load distribution: if you put the most popular data in a few shards, they can still get overloaded with queries and freeze, while other shards are underused.

- Data distribution: you need to make sure that no data is omitted and that the same piece isn’t stored on several machines.
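To make the load distribution issue concrete, here is a small sketch comparing two partitioning rules on made-up keys. Both rules (`range_shard`, which splits by first letter, and `hash_shard`) are illustrative assumptions rather than a recommendation for a real system.

```python
import hashlib
from collections import Counter

NUM_SHARDS = 4
keys = [f"user{i:04d}" for i in range(1000)]  # made-up keys, all start with "u"


def range_shard(key: str) -> int:
    """Naive range partitioning by first letter: a-f, g-m, n-s, t-z."""
    first = key[0].lower()
    for index, upper in enumerate("fmsz"):
        if first <= upper:
            return index
    return NUM_SHARDS - 1


def hash_shard(key: str) -> int:
    """Hash partitioning: spreads keys regardless of their prefix."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % NUM_SHARDS


range_load = Counter(range_shard(k) for k in keys)
hash_load = Counter(hash_shard(k) for k in keys)
print("range:", dict(range_load))
print("hash: ", dict(hash_load))
```

With these keys, the range rule sends every record to the last shard, while the hash rule spreads them across all four.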

Now that you understand the concept of sharding, let’s look at sharding in the blockchain space.

Sharding in blockchain

Scaling a blockchain vs. scaling a centralized database

A blockchain is a decentralized database that needs to handle queries (transactions) like payments and smart contract calls. But unlike a centralized database on a single powerful server, a blockchain state resides in thousands of copies across all of its validator nodes – most of them much less powerful than a traditional data center server.

You could say that a blockchain network already uses horizontal replication by design. Some nodes can go offline and the blockchain will keep operating. But while replication ensures fault tolerance, it doesn’t help with high workloads.

In fact, scaling for higher workloads is a much bigger problem in blockchains like Ethereum than in centralized databases, because every blockchain validator has to process every transaction. It’s a cornerstone of transparency and security, but the result is a network that’s only as fast as a single node.

The challenges

Since a blockchain is a database, it can be sharded. Each validator is assigned to one shard and processes only the transactions related to that shard. As nodes can now divide the workload instead of having to process all the transactions, you can avoid bottlenecks and achieve the ultimate goal: scaling.

At the same time, every node requires less storage space, as it presumably doesn’t need to store the whole blockchain state. This allows more users to run nodes and increases decentralization.

Sounds good, right? There are several serious decisions to be made, though.

- Partitioning logic: Blockchain sharding is more complicated than having some dApps live on one shard and other dApps on another. Are you going to split just transaction processing among the shards, or will they also store separate parts of the blockchain state?

- Collation: All the data recorded on different shards will need to be collected in a single place, which can be called a beacon chain, a mother chain, a masterchain, etc. You’ll also need to come up with an economical way to record the information about shard blocks.

- Communication: How do you enable the transfer of data and assets between shards and the dApps that run on them? For example, if Bob has an account on Shard A and wants to use a dApp that resides on Shard B, how will he do that?

The two main solutions to this are synchronous and asynchronous sharding. In synchronous sharding, when a transaction involves two shards, the blocks that will include it have to be produced simultaneously (or at least added to the same slot on the mother chain/beacon chain).

In the asynchronous approach, the shard where the transaction originates (i.e. the one that the money is sent from) processes the transaction first. The second shard receives some sort of confirmation and then processes its part, “crediting” the money to the recipient.

- Security: A single shard has fewer validators than a whole unsharded blockchain, making it easier to attack and take over. Basically, if you need 51% of the votes to take over a chain, then on a blockchain with 1,000 validators it translates into 510 validators; but if you split the network into 10 shards with 100 validators each, you’ll need just 51 votes to stage a successful attack on one shard.
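The asynchronous approach to cross-shard communication described above can be sketched as a two-step flow. This is a toy model under simplifying assumptions: the `Shard` and `Receipt` classes are hypothetical, there is no consensus or proof verification, and the relay between shards is a plain list append.

```python
from dataclasses import dataclass, field


@dataclass
class Receipt:
    """Message stating that the source shard has debited the sender."""
    recipient: str
    amount: int


@dataclass
class Shard:
    balances: dict = field(default_factory=dict)
    inbox: list = field(default_factory=list)

    def debit_and_emit(self, sender: str, recipient: str, amount: int) -> Receipt:
        """Step 1: the source shard debits the sender and emits a receipt."""
        if self.balances.get(sender, 0) < amount:
            raise ValueError("insufficient funds")
        self.balances[sender] -= amount
        return Receipt(recipient, amount)

    def process_inbox(self) -> None:
        """Step 2: the destination shard credits recipients in a later block."""
        for receipt in self.inbox:
            self.balances[receipt.recipient] = (
                self.balances.get(receipt.recipient, 0) + receipt.amount
            )
        self.inbox.clear()


shard_a = Shard(balances={"bob": 100})
shard_b = Shard(balances={"dapp": 0})

receipt = shard_a.debit_and_emit("bob", "dapp", 40)
shard_b.inbox.append(receipt)  # relayed between shards
shard_b.process_inbox()
```

Between the two steps, the money has left Shard A but hasn’t yet arrived on Shard B, which is the main trade-off of the asynchronous design compared with the synchronous one.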

Sharding in action: Ethereum, Zilliqa, TON, NEAR

Now that you understand how sharding works in blockchain, let’s see how different blockchains implement it in practice.

Ethereum: from 64 shards to danksharding

The plan to introduce sharding in Ethereum was announced in April 2018, and now, five and a half years later, we are finally close. However, the final design, set to become part of the Cancun upgrade, is completely different from the original idea.

We’ll publish a separate deep dive into Cancun and its corresponding Beacon Chain fork, Deneb (“Dencun”). In this article, we’ll give you the gist.

ETH2 sharding (deprecated): the 64-shard network

According to the original plan, Ethereum was to be split into 64 shards: the current chain plus 63 more. Each shard was to have at least 128 validators. Every epoch (around 6.4 minutes), the Beacon Chain would reshuffle validators among the shards in a pseudorandom way, with one validator on each shard given the role of block proposer. In addition, 128 randomly selected validators would form a committee on the Beacon Chain.

This block proposer would create a shard block (a collation of transactions) and propose it to the others for verification. If at least ⅔ of the validators attested to (approved) a collation, its header would be sent to the Beacon Chain, and the block proposer would receive a reward.

In turn, the Beacon Chain committee would verify the attestations on all the block headers coming from different shards and form Beacon Chain blocks.
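The reshuffling and the ⅔ attestation rule can be sketched as follows. This is only an illustration of the idea: Python’s `random.Random` stands in for the protocol’s randomness, and the function names and the "first validator is the proposer" rule are assumptions made for the example.

```python
import random

NUM_SHARDS = 64
MIN_VALIDATORS_PER_SHARD = 128


def assign_validators(validators: list, epoch_seed: int) -> dict:
    """Shuffle validators with a per-epoch seed and deal them out to shards.

    In this sketch, the first validator dealt to a shard is its block proposer.
    """
    rng = random.Random(epoch_seed)  # stand-in for the protocol's randomness
    shuffled = validators[:]
    rng.shuffle(shuffled)
    return {s: shuffled[s::NUM_SHARDS] for s in range(NUM_SHARDS)}


def collation_accepted(attestations: int, committee_size: int) -> bool:
    """A collation passes if at least two thirds of the shard attests to it."""
    return 3 * attestations >= 2 * committee_size


validators = list(range(NUM_SHARDS * MIN_VALIDATORS_PER_SHARD))
epoch_1 = assign_validators(validators, epoch_seed=1)
epoch_2 = assign_validators(validators, epoch_seed=2)
proposer = epoch_1[0][0]
```

With 128 validators on a shard, `collation_accepted` requires at least 86 attestations.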

The first implementation phase would have shards as a data layer, without communication between them and without an ability to host smart contracts and user accounts. In the second phase, shards would be able to run dApps and host accounts independently and communicate with each other. Ethereum’s overall processing capacity would rise from today’s 27 TPS to 100,000 TPS.

The whole system would be very secure thanks to the pseudorandom validator selection and constant reshuffling. An attacker would have a one-in-a-trillion chance of taking control of ⅔ of the validators in a single shard.
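A rough way to see why random assignment makes a takeover so unlikely is to compute a binomial tail. The sketch below assumes an attacker who controls one third of all validators and treats each of the 128 seats as an independent draw; both are simplifying assumptions for illustration, not the exact model used in the Ethereum specification.

```python
from fractions import Fraction
from math import comb

COMMITTEE_SIZE = 128
THRESHOLD = (2 * COMMITTEE_SIZE + 2) // 3  # ceil(2/3 * 128) seats


def takeover_probability(attacker_share: Fraction) -> float:
    """Binomial tail: chance that at least THRESHOLD seats go to the attacker."""
    p, q = attacker_share, 1 - attacker_share
    tail = sum(
        comb(COMMITTEE_SIZE, k) * p**k * q ** (COMMITTEE_SIZE - k)
        for k in range(THRESHOLD, COMMITTEE_SIZE + 1)
    )
    return float(tail)


prob = takeover_probability(Fraction(1, 3))
print(f"P(attacker gets >= {THRESHOLD} of {COMMITTEE_SIZE} seats) = {prob:.3e}")
```

Under these assumptions, the result comes out below one in a trillion, and a smaller attacker share pushes it down further.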

Danksharding and proto-danksharding: coming with the Cancun upgrade

In 2022, Ethereum developers came up with a totally different design following the explosion of optimistic and ZK rollups. Instead of splitting Ethereum L1 into shards, the plan is to create a cheap way for rollups to send transaction data to the L1.

The first version of this paradigm is “proto-danksharding,” described in Ethereum Improvement Proposal 4844 (EIP-4844). The term comes from the names of two key contributors: Dankrad Feist and Protolambda (real name Diederik Loerakker).

Proto-danksharding will be the main feature of the upcoming Cancun-Deneb (“Dencun”) upgrade. It was supposed to go live in October 2023, but we may have to wait until 2024.

Why do we need proto-danksharding?

Rollups like Arbitrum, Optimism, and zkSync Era have to make their transaction data available on the Ethereum mainnet so that any interested validator (“prover”) can verify that the rollup operator isn’t committing fraud. The problem is that this information is currently sent using calldata and every non-zero byte of calldata costs 16 gas. The rollup transaction fees that you pay as an end user are almost entirely L1 calldata fees.
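To get a feel for the numbers, here is a small calculator for calldata costs. The 16 gas per non-zero byte comes from the text above; the 4 gas per zero byte is the standard calldata price from EIP-2028. The batch contents and the gas price are made-up values, and the calculation ignores the base transaction cost.

```python
GAS_PER_NONZERO_BYTE = 16  # from the text above
GAS_PER_ZERO_BYTE = 4      # calldata price for zero bytes (EIP-2028)


def calldata_gas(data: bytes) -> int:
    """Gas charged for the calldata bytes alone."""
    zero = data.count(0)
    return zero * GAS_PER_ZERO_BYTE + (len(data) - zero) * GAS_PER_NONZERO_BYTE


def calldata_cost_eth(data: bytes, gas_price_gwei: float) -> float:
    """Convert calldata gas into ETH at a given gas price (1 gwei = 1e-9 ETH)."""
    return calldata_gas(data) * gas_price_gwei * 1e-9


# Made-up 100 kB rollup batch: 90,000 non-zero bytes and 10,000 zero bytes.
batch = bytes([1]) * 90_000 + bytes(10_000)
print(calldata_gas(batch))             # 1480000 gas
print(calldata_cost_eth(batch, 30.0))  # about 0.0444 ETH at 30 gwei
```

This is the cost that rollups pass on to their users, which is why a cheaper data channel matters so much for L2 fees.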

Danksharding proposes an alternative: wrapping rollup transaction data into “blobs” (Binary Large Objects). They will be attached to special blob-carrying transactions instead of using the calldata field. A blob consists of the body and a small header and can be up to 125 kilobytes, which is a lot for Ethereum.

Blobs can’t be read by the EVM and won’t come into contact with it. As they land on the mainnet, the Beacon Chain will just check that the data in them is available. They will be stored on the L1 only for a few weeks or a couple of months, though other participants like rollup operators can keep storing blobs as long as they want.

To verify blob data, proto-danksharding uses a cryptographic scheme known as Kate-Zaverucha-Goldberg (KZG). It consists of applying a polynomial function to the data in a series of points to get the value of the function in those points (“commitment”).

A rollup operator posts a commitment together with a blob. A prover can apply the same function to the same points to check that the values match. The fact that the prover doesn’t have to check the whole blob but only a few points makes this a cheap and efficient way to verify rollup transactions on the mainnet.
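The intuition of "check a polynomial at a point instead of reading all the data" can be shown with a toy example. To be clear, this is not KZG: real KZG commitments rely on elliptic-curve pairings and a secret point produced by a trusted setup. The sketch below only treats the data as polynomial coefficients over a small prime field and compares evaluations; the modulus, the point, and the sample data are all arbitrary choices.

```python
PRIME = 2**61 - 1  # a Mersenne prime used as a toy field modulus


def evaluate(data: bytes, point: int) -> int:
    """Treat each byte as a polynomial coefficient and evaluate with Horner's rule."""
    result = 0
    for coefficient in reversed(data):
        result = (result * point + coefficient) % PRIME
    return result


blob = b"rollup batch #1: alice->bob 5, bob->carol 2"
tampered = blob.replace(b"5", b"6")
point = 123_456_789  # in real KZG, nobody knows the secret point

claimed = evaluate(blob, point)

print(evaluate(blob, point) == claimed)      # honest data matches the claim
print(evaluate(tampered, point) == claimed)  # altered data does not
```

Two different polynomials can agree at only a handful of points, so a single evaluation at an unpredictable point is enough to catch altered data with very high probability.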

It’s important for rollup operators and provers to come up with a series of points that nobody else will know. The procedure is called a KZG ceremony, and Ethereum recently held a huge one, with over 141,000 users contributing random numbers to create a truly secure string.

By the way, if all this seems very complicated, wait for our detailed article on Cancun and danksharding.

Another interesting thing about the danksharding roadmap is that it relies on separating block builders and block proposers (this is part of the full danksharding design rather than of proto-danksharding itself). Builders are validators with powerful hardware who will place bids to form a new block. The proposer will select the highest bidder, and the winner will be responsible for producing the whole block.

Will Ethereum gas fees go down?

Proto-danksharding won’t affect the gas fees on Ethereum mainnet, but it should cut rollup fees by as much as 100x. That’s why many expect L2 chains to benefit and even rally after the Cancun fork.

Zilliqa: only simpler transactions are processed by shards

Back in 2015, Zilliqa’s team authored what appears to be the first-ever paper on blockchain sharding. Launched in 2017, Zilliqa ($ZIL) is thus positioned as “the first sharding-based blockchain.” It’s also EVM-compatible.

Zilliqa has four shards that produce “microblocks.” Every epoch, nodes are reshuffled among the shards, but there need to be at least 600 nodes per shard.

Some of the nodes also participate in the Directory Service (DS) Committee. They collate microblocks into proper Transaction Blocks; determine which node will join which shard; generate special DS blocks; and process complex transactions that aren’t assigned to shards (see below).

The important thing to understand about Zilliqa is that it shards transaction processing but not network storage: each node still stores the whole blockchain state. How transactions are assigned to shards depends on the type of transaction:

- A simple payment between two users: assigned to a shard based on the first few bits of the sender’s address.

- A user interacting with a smart contract: assigned in the same way, provided that the last two bits of the user’s and the contract’s addresses are the same.

- All other transactions are processed by the DS Committee. This includes those in which the last 2 bits of the addresses are different, as well as complex interactions, such as when a user calls a contract, which calls another contract.
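The assignment rules above can be written down as a small routing function. This is a sketch of the rules as described in this article, not Zilliqa’s actual code: the 160-bit address size, the use of exactly two bits, and the `route` function itself are assumptions.

```python
from typing import Optional, Union

ADDRESS_BITS = 160  # assumption: 20-byte addresses
SHARD_BITS = 2      # four shards, so two bits select a shard
DS_COMMITTEE = "DS"


def first_bits(address: int) -> int:
    """The leading SHARD_BITS bits of an address."""
    return address >> (ADDRESS_BITS - SHARD_BITS)


def last_bits(address: int) -> int:
    """The trailing SHARD_BITS bits of an address."""
    return address & ((1 << SHARD_BITS) - 1)


def route(
    sender: int,
    contract: Optional[int] = None,
    calls_other_contract: bool = False,
) -> Union[int, str]:
    """Return a shard index (0-3) or DS_COMMITTEE for a transaction."""
    if contract is None:
        return first_bits(sender)  # simple payment
    if not calls_other_contract and last_bits(sender) == last_bits(contract):
        return first_bits(sender)  # contract call handled by a shard
    return DS_COMMITTEE            # everything else


alice = (0b10 << (ADDRESS_BITS - SHARD_BITS)) | 0b01
contract_same = 0b01   # last two bits match Alice's
contract_other = 0b11  # last two bits differ

print(route(alice))                  # 2
print(route(alice, contract_same))   # 2
print(route(alice, contract_other))  # DS
```

Anything that can’t be resolved inside a single shard falls through to the DS Committee, which is why only the simpler transactions benefit from sharding in this model.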

Zilliqa relies on Proof-of-Work, and the fees are a bit higher than you’d expect from a sharded blockchain. It costs $0.1 to transfer tokens and NFTs and $0.01 to send ZIL. Finality time is around 30 seconds – a far cry from Aptos’ sub-second finality, for example.

The team is working on Zilliqa 2.0, which will have a new sharding model. Developers will be able to play with small customizable shards, setting their own security rules and finality time, and nominating validators.

Each shard in Zilliqa will also have an encryption key, allowing projects to build private shards whose data only they can decrypt. A long-term objective is to add ZK proofs to allow shards to share data in a private and compliant way. Right now, there is little communication between shards in Zilliqa.

TON: dynamic sharding and millions of smart contracts

TON (The Open Network) started out as Telegram Open Network, a brainchild of Nikolai and Pavel Durov, the creators of Telegram. After Telegram had to close down the project, it was picked up by the community and is now run by the TON Foundation. TON is not EVM-compatible and uses a smart contract language called FunC.

TON’s sharding model has three very interesting features.

- The number of shards changes dynamically: it can both increase and decrease. If the transaction load becomes too large for a specific shard, it splits in two; but if the load falls, shards can be joined together. TON is the only L1 to support shard merging.

- The sheer potential number of shards is enormous. TON can have up to 2^30 workchains – that’s over 1 billion. In turn, every workchain can be split into up to 2^60 shards. The total potential number of shards in the system would be an incredibly large 2^90. According to the TON blog, each person on the planet would get over 100 million shards.

For now, there are just two workchains: BaseChain, where regular transactions are processed, and MasterChain, which gathers information about the general blockchain state in its masterblocks.

Anyone can create a workchain, but it is rather costly, so for now all projects on TON are content to run on the BaseChain.

- Smart contracts can also be sharded – divided into many small instances. This way, if a workchain is split into shards, different instances (parts) of a contract can end up on different shards and yet the contract will continue to work.

How many instances there should be is up to each smart contract engineer to decide. When dealing with a fungible token, you can have an instance (essentially a separate contract) for every user balance, plus a parent instance to host the basic information on the token. If there are 1,000,000 token holders, there would be 1,000,001 smart contracts in total just for that token. Similarly, every NFT in a collection has its own smart contract on TON, in addition to the collection smart contract.
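Dynamic splitting and merging can be sketched with shards identified by a binary prefix of the account address, so that splitting a shard appends one bit and merging removes it. The load thresholds and the `rebalance` function are hypothetical, and the sketch assumes that load divides evenly when a shard splits.

```python
SPLIT_ABOVE = 100  # hypothetical load thresholds (transactions per block)
MERGE_BELOW = 20


def rebalance(shards: dict) -> dict:
    """Split overloaded shards and merge underloaded sibling pairs.

    Shards are keyed by a binary prefix of the account address ("" = everything).
    """
    result = {}
    for prefix, load in shards.items():
        if load > SPLIT_ABOVE:
            # Assume the load divides evenly between the two children.
            result[prefix + "0"] = load // 2
            result[prefix + "1"] = load - load // 2
        else:
            result[prefix] = load

    for prefix in sorted(result, key=len, reverse=True):
        if not prefix.endswith("0") or prefix not in result:
            continue
        sibling = prefix[:-1] + "1"
        if sibling in result and result[prefix] + result[sibling] < MERGE_BELOW:
            result[prefix[:-1]] = result.pop(prefix) + result.pop(sibling)
    return result


before = {"0": 150, "10": 5, "11": 8}
after = rebalance(before)
print(after)
```

In this run, the busy shard `0` splits into `00` and `01`, while the quiet siblings `10` and `11` merge back into `1`.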

Like Aptos, TON already has what’s needed to scale. But the actual load isn’t high: as of October 2023, TON processes less than 1.5 transactions per second.

NEAR: splitting a single transaction between shards

NEAR’s sharding protocol is called Nightshade, and its introduction is split into four phases. Phase 0, Simple Nightshade, launched in November 2021 and involved splitting the blockchain state into four shards. The list of transactions in a block is split into chunks, one chunk per shard.

The shards aren’t separate chains, though: every NEAR block contains all the information about transactions from all the shards, and every validator (block producer) tracks all the shards.

Phase 1, Chunk-Only Producers, launched in September 2022 and sharded transaction processing as well as the blockchain state. Now, for every block and every shard, a node is assigned to produce the chunk of transactions that corresponds to that shard. So with four shards, you have four chunks per block, created by four different producers.
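The relationship between blocks, chunks, and chunk producers can be sketched like this. It is a simplified model: the hash-based `shard_of` mapping and the round-robin producer rotation are assumptions made for the example and don’t reflect NEAR’s actual shard layout or producer selection.

```python
import hashlib
from collections import defaultdict

NUM_SHARDS = 4


def shard_of(account_id: str) -> int:
    """Hypothetical account-to-shard mapping (not NEAR's actual layout)."""
    digest = hashlib.sha256(account_id.encode("utf-8")).digest()
    return digest[0] % NUM_SHARDS


def build_block(height: int, transactions: list, producers: list) -> dict:
    """Group a block's transactions into one chunk per shard."""
    by_shard = defaultdict(list)
    for tx in transactions:
        by_shard[shard_of(tx["signer"])].append(tx)
    chunks = [
        {
            "shard": shard,
            # Rotate producers so that each shard gets a different one per block.
            "producer": producers[(height + shard) % len(producers)],
            "transactions": by_shard[shard],
        }
        for shard in range(NUM_SHARDS)
    ]
    return {"height": height, "chunks": chunks}


txs = [{"signer": f"user{i}.near", "action": "transfer"} for i in range(20)]
block = build_block(height=7, transactions=txs, producers=["p0", "p1", "p2", "p3"])
```

The block still contains all four chunks, which matches the point that shards on NEAR aren’t separate chains.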

Chunk-only producers don’t produce full blocks and don’t approve anything. They don’t have to stake a huge amount of NEAR or download the full network state. A computer with 200 GB of free space and 8 GB of RAM should be enough for the task. As of July 2023, NEAR had 100 staking validators and 119 chunk-only producers.

Phase 2, or Nightshade proper, will do away with the need for all validators to verify all the shards. Finally, Phase 3, or Dynamic Resharding, will enable splitting and merging shards as needed – something that TON can already do. Phases 2 and 3 were originally scheduled for 2022, but as of September 2023, there is no clear date set for implementation.

We’ll keep you posted on the progress of sharding in Ethereum and other blockchains. Meanwhile, we have lots of updates and new features of our own coming up, including concentrated liquidity on Liquidswap and the new PontemAI chatbot. Follow us on Telegram, Twitter, and Discord and stay tuned!

About Pontem

Pontem Network is a product studio building the first-ever suite of foundational dApps for Aptos. Pontem Wallet, the first wallet for Aptos, is available for Chrome, Mozilla Firefox, Android, and iOS.

Use Pontem Wallet to store and send tokens on Aptos. The wallet is integrated with our Liquidswap DEX, the first DEX and AMM for Aptos, Topaz and Souffl3 NFT marketplaces, Ditto and Tortuga liquid staking platforms, Argo and Aries lending protocols, and all other major Aptos dApps.

Our other products include the browser code editor Move Playground, the Move IntelliJ IDE plugin for developers, and the Solidity-to-Move code translator ByteBabel, the first-ever implementation of the Ethereum Virtual Machine for Aptos.
